#sentiment analyzer with python


Best AI Training in Electronic City, Bangalore – Become an AI Expert & Launch a Future-Proof Career!

Artificial Intelligence (AI) is reshaping industries and driving the future of technology. Whether it's automating tasks, building intelligent systems, or analyzing big data, AI has become a key career path for tech professionals. At eMexo Technologies, we offer a job-oriented AI Certification Course in Electronic City, Bangalore tailored for both beginners and professionals aiming to break into or advance within the AI field.

Our training program provides everything you need to succeed—core knowledge, hands-on experience, and career-focused guidance—making us a top choice for AI Training in Electronic City, Bangalore.

🌟 Who Should Join This AI Course in Electronic City, Bangalore?

This AI Course in Electronic City, Bangalore is ideal for:

Students and Freshers seeking to launch a career in Artificial Intelligence

Software Developers and IT Professionals aiming to upskill in AI and Machine Learning

Data Analysts, System Engineers, and tech enthusiasts moving into the AI domain

Professionals preparing for certifications or transitioning to AI-driven job roles

With a well-rounded curriculum and expert mentorship, our course serves learners across various backgrounds and experience levels.

📘 What You Will Learn in the AI Certification Course

Our AI Certification Course in Electronic City, Bangalore covers the most in-demand tools and techniques. Key topics include:

Foundations of AI: Core AI principles, machine learning, deep learning, and neural networks

Python for AI: Practical Python programming tailored to AI applications

Machine Learning Models: Learn supervised, unsupervised, and reinforcement learning techniques

Deep Learning Tools: Master TensorFlow, Keras, OpenCV, and other industry-used libraries

Natural Language Processing (NLP): Build projects like chatbots, sentiment analysis tools, and text processors

Live Projects: Apply knowledge to real-world problems such as image recognition and recommendation engines

All sessions are conducted by certified professionals with real-world experience in AI and Machine Learning.

🚀 Why Choose eMexo Technologies – The Best AI Training Institute in Electronic City, Bangalore

eMexo Technologies is not just another AI Training Center in Electronic City, Bangalore—we are your AI career partner. Here's what sets us apart as the Best AI Training Institute in Electronic City, Bangalore:

✅ Certified Trainers with extensive industry experience

✅ Fully Equipped Labs and hands-on real-time training

✅ Custom Learning Paths to suit your individual career goals

✅ Career Services like resume preparation and mock interviews

✅ AI Training Placement in Electronic City, Bangalore with 100% placement support

✅ Flexible Learning Modes including both classroom and online options

We focus on real skills that employers look for, ensuring you're not just trained—but job-ready.

🎯 Secure Your Future with the Leading AI Training Institute in Electronic City, Bangalore

The demand for skilled AI professionals is growing rapidly. By enrolling in our AI Certification Course in Electronic City, Bangalore, you gain the tools, confidence, and guidance needed to thrive in this cutting-edge field. From foundational concepts to advanced applications, our program prepares you for high-demand roles in AI, Machine Learning, and Data Science.

At eMexo Technologies, our mission is to help you succeed—not just in training but in your career.

📞 Call or WhatsApp: +91-9513216462

📧 Email: [email protected]

🌐 Website: https://www.emexotechnologies.com/courses/artificial-intelligence-certification-training-course/

Seats are limited – Enroll now in the most trusted AI Training Institute in Electronic City, Bangalore and take the first step toward a successful AI career.

🔖 Popular Hashtags

#AITrainingInElectronicCityBangalore #AICertificationCourseInElectronicCityBangalore #AICourseInElectronicCityBangalore #AITrainingCenterInElectronicCityBangalore #AITrainingInstituteInElectronicCityBangalore #BestAITrainingInstituteInElectronicCityBangalore #AITrainingPlacementInElectronicCityBangalore #MachineLearning #DeepLearning #AIWithPython #AIProjects #ArtificialIntelligenceTraining #eMexoTechnologies #FutureTechSkills #ITTrainingBangalore #Youtube


Alltick API: Where Market Data Becomes a Sixth Sense

When trading algorithms dream, they dream in Alltick’s data streams.

The Invisible Edge

Imagine knowing the market’s next breath before it exhales. While others trade on yesterday’s shadows, Alltick’s data interface illuminates the present tense of global markets:

0ms latency across 58 exchanges

Atomic-clock synchronization for cross-border arbitrage

Self-healing protocols that outsmart even solar flare disruptions

The API That Thinks in Light-Years

🌠 Photon Data Pipes Our fiber-optic neural network routes market pulses at 99.7% light speed—faster than Wall Street’s CME backbone.

🧬 Evolutionary Endpoints Machine learning interfaces that mutate with market conditions, automatically optimizing data compression ratios during volatility storms.

🛸 Dark Pool Sonar Proprietary liquidity radar penetrates 93% of hidden markets, mapping iceberg orders like submarine topography.

⚡ Energy-Aware Architecture Green algorithms that recycle computational heat to power real-time analytics—turning every trade into an eco-positive event.

Secret Weapons of the Algorithmic Elite

Fed Whisperer Module: Decode central bank speech patterns 14ms before news wires explode

Meme Market Cortex: Track Reddit/Github/TikTok sentiment shifts through self-training NLP interfaces

Quantum Dust Explorer: Mine microsecond-level anomalies in options chains for statistical arbitrage gold

Build the Unthinkable

Your dev playground includes:

🧪 CRISPR Data Editor: Splice real-time ticks with alternative data genomes

🕹️ HFT Stress Simulator: Test strategies against synthetic black swan events

📡 Satellite Direct Feed: Bypass terrestrial bottlenecks with LEO satellite clusters

The Silent Revolution

Last month, three Alltick-powered systems achieved the impossible:

A crypto bot front-ran Elon’s tweet storm by analyzing Starlink latency fluctuations

A London hedge fund predicted a metals squeeze by tracking Shanghai warehouse RFID signals

An AI trader passed the Turing Test by negotiating OTC derivatives via synthetic voice interface

72-Hour Quantum Leap Offer

Deploy Alltick before midnight UTC and unlock:

🔥 Dark Fiber Priority Lane (50% faster than standard feeds)

💡 Neural Compiler (Auto-convert strategies between Python/Rust/HDL)

🔐 Black Box Vault (Military-grade encrypted data bunker)

Warning: May cause side effects including disgust toward legacy APIs, uncontrollable urge to optimize everything, and permanent loss of the concept of "downtime".

Alltick doesn’t predict the future; we deliver it 42 microseconds early. (Data streams may contain traces of singularity. Not suitable for analog traders.)


Why Should You Do Web Scraping with Python

Web scraping is a valuable skill for Python developers, offering numerous benefits and applications. Here’s why you should consider learning and using web scraping with Python:

1. Automate Data Collection

Web scraping allows you to automate the tedious task of manually collecting data from websites. This can save significant time and effort when dealing with large amounts of data.

2. Gain Access to Real-World Data

Most real-world data exists on websites, often in formats that are not readily available for analysis (e.g., displayed in tables or charts). Web scraping helps extract this data for use in projects like:

Data analysis

Machine learning models

Business intelligence

3. Competitive Edge in Business

Businesses often need to gather insights about:

Competitor pricing

Market trends

Customer reviews

Web scraping can help automate these tasks, providing timely and actionable insights.

4. Versatility and Scalability

Python’s ecosystem offers a range of tools and libraries that make web scraping highly adaptable:

BeautifulSoup: For simple HTML parsing.

Scrapy: For building scalable scraping solutions.

Selenium: For handling dynamic, JavaScript-rendered content.

This versatility allows you to scrape a wide variety of websites, from static pages to complex web applications.
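To make the parsing step concrete, here is a minimal sketch that pulls rows out of an HTML table. It deliberately uses Python's built-in `html.parser` as a stand-in so that it runs without installing anything; BeautifulSoup offers a friendlier interface for the same job. The `PriceTableParser` class and the sample HTML are invented for illustration.

```python
from html.parser import HTMLParser


class PriceTableParser(HTMLParser):
    """Collects the text of every <td> cell, one list per <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.rows.append([])
        elif tag == "td":
            if not self.rows:  # tolerate a cell that appears before any row
                self.rows.append([])
            self.rows[-1].append("")
            self._in_cell = True

    def handle_endtag(self, tag):
        if tag == "td":
            self._in_cell = False

    def handle_data(self, data):
        # Only keep text that sits inside a table cell
        if self._in_cell:
            self.rows[-1][-1] += data.strip()


# Hypothetical page fragment; a real scraper would download this first
SAMPLE_HTML = """
<table>
  <tr><td>Widget</td><td>9.99</td></tr>
  <tr><td>Gadget</td><td>24.50</td></tr>
</table>
"""

parser = PriceTableParser()
parser.feed(SAMPLE_HTML)
prices = {name: float(price) for name, price in parser.rows}
print(prices)
```

The same structure carries over to other libraries: locate the elements of interest, extract their text, then convert it into typed values.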

5. Academic and Research Applications

Researchers can use web scraping to gather datasets from online sources, such as:

Social media platforms

News websites

Scientific publications

This facilitates research in areas like sentiment analysis, trend tracking, and bibliometric studies.

6. Enhance Your Python Skills

Learning web scraping deepens your understanding of Python and related concepts:

HTML and web structures

Data cleaning and processing

API integration

Error handling and debugging

These skills are transferable to other domains, such as data engineering and backend development.

7. Open Opportunities in Data Science

Many data science and machine learning projects require datasets that are not readily available in public repositories. Web scraping empowers you to create custom datasets tailored to specific problems.

8. Real-World Problem Solving

Web scraping enables you to solve real-world problems, such as:

Aggregating product prices for an e-commerce platform.

Monitoring stock market data in real-time.

Collecting job postings to analyze industry demand.

9. Low Barrier to Entry

Python's libraries make web scraping relatively easy to learn. Even beginners can quickly build effective scrapers, making it an excellent entry point into programming or data science.

10. Cost-Effective Data Gathering

Instead of purchasing expensive data services, web scraping allows you to gather the exact data you need at little to no cost, apart from the time and computational resources.

11. Creative Use Cases

Web scraping supports creative projects like:

Building a news aggregator.

Monitoring trends on social media.

Creating a chatbot with up-to-date information.

Caution

While web scraping offers many benefits, it’s essential to use it ethically and responsibly:

Respect websites' terms of service and robots.txt.

Avoid overloading servers with excessive requests.

Ensure compliance with data privacy laws like GDPR or CCPA.
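The first two points can be sketched with the standard library alone. The example below parses a robots.txt body with `urllib.robotparser`, skips disallowed URLs, and pauses between requests. The robots.txt content, the `example-bot` user agent, the URLs, and the `fetch_politely` helper are all made up for illustration, and the demo uses a stub in place of real network calls and real sleeping.

```python
import time
from urllib.robotparser import RobotFileParser

USER_AGENT = "example-bot"  # hypothetical crawler name

# Hypothetical robots.txt body; a real scraper would download the site's own file
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())

# Fall back to a one-second pause if the site declares no crawl delay
delay = rules.crawl_delay(USER_AGENT) or 1


def fetch_politely(urls, fetch, delay_seconds, sleep=time.sleep):
    """Fetch only the URLs robots.txt allows, pausing between requests."""
    results = {}
    for url in urls:
        if not rules.can_fetch(USER_AGENT, url):
            continue  # respect the Disallow rules
        if results:
            sleep(delay_seconds)  # avoid hammering the server
        results[url] = fetch(url)
    return results


candidates = [
    "https://example.com/products",
    "https://example.com/private/admin",
    "https://example.com/blog",
]

# Demo with stubs: no network access, and pauses are recorded instead of slept
pauses = []
pages = fetch_politely(
    candidates,
    fetch=lambda url: f"<html>stub for {url}</html>",
    delay_seconds=delay,
    sleep=pauses.append,
)
print(list(pages), pauses)
```

In a real scraper, `fetch` would be a function that performs the HTTP request and `sleep` would be left at its default. Compliance with terms of service and privacy law still needs a human decision; no code can check it for you.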

If you'd like guidance on getting started or exploring specific use cases, let me know!


From Curious Novice to Data Enthusiast: My Data Science Adventure

I've always been fascinated by data science, a field that seamlessly blends technology, mathematics, and curiosity. In this article, I want to take you on a journey—my journey—from being a curious novice to becoming a passionate data enthusiast. Together, let's explore the thrilling world of data science, and I'll share the steps I took to immerse myself in this captivating realm of knowledge.

The Spark: Discovering the Potential of Data Science

The moment I stumbled upon data science, I felt a spark of inspiration. Witnessing its impact across various industries, from healthcare and finance to marketing and entertainment, I couldn't help but be drawn to this innovative field. The ability to extract critical insights from vast amounts of data and uncover meaningful patterns fascinated me, prompting me to dive deeper into the world of data science.

Laying the Foundation: The Importance of Learning the Basics

To embark on this data science adventure, I quickly realized the importance of building a strong foundation. Learning the basics of statistics, programming, and mathematics became my priority. Understanding statistical concepts and techniques enabled me to make sense of data distributions, correlations, and significance levels. Programming languages like Python and R became essential tools for data manipulation, analysis, and visualization, while a solid grasp of mathematical principles empowered me to create and evaluate predictive models.

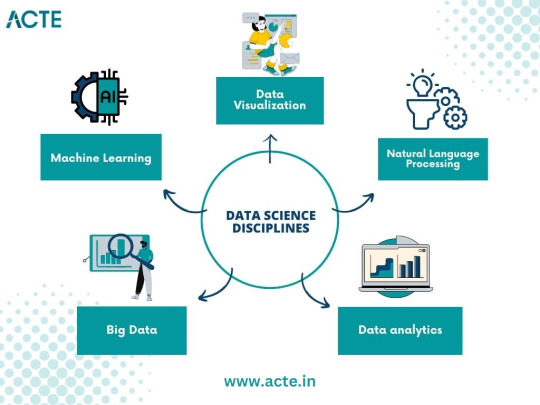

The Quest for Knowledge: Exploring Various Data Science Disciplines

A. Machine Learning: Unraveling the Power of Predictive Models

Machine learning, a prominent discipline within data science, captivated me with its ability to unlock the potential of predictive models. I delved into the fundamentals, understanding the underlying algorithms that power these models. Supervised learning, where data with labels is used to train prediction models, and unsupervised learning, which uncovers hidden patterns within unlabeled data, intrigued me. Exploring concepts like regression, classification, clustering, and dimensionality reduction deepened my understanding of this powerful field.

B. Data Visualization: Telling Stories with Data

In my data science journey, I discovered the importance of effectively visualizing data to convey meaningful stories. Navigating through various visualization tools and techniques, such as creating dynamic charts, interactive dashboards, and compelling infographics, allowed me to unlock the hidden narratives within datasets. Visualizations became a medium to communicate complex ideas succinctly, enabling stakeholders to understand insights effortlessly.

C. Big Data: Mastering the Analysis of Vast Amounts of Information

The advent of big data challenged traditional data analysis approaches. To conquer this challenge, I dived into the world of big data, understanding its nuances and exploring techniques for efficient analysis. Uncovering the intricacies of distributed systems, parallel processing, and data storage frameworks empowered me to handle massive volumes of information effectively. With tools like Apache Hadoop and Spark, I was able to mine valuable insights from colossal datasets.

D. Natural Language Processing: Extracting Insights from Textual Data

Textual data surrounds us in the digital age, and the realm of natural language processing fascinated me. I delved into techniques for processing and analyzing unstructured text data, uncovering insights from tweets, customer reviews, news articles, and more. Understanding concepts like sentiment analysis, topic modeling, and named entity recognition allowed me to extract valuable information from written text, a capability that is reshaping areas like customer service and content recommendation systems.

Building the Arsenal: Acquiring Data Science Skills and Tools

Acquiring essential skills and familiarizing myself with relevant tools played a crucial role in my data science journey. Programming languages like Python and R became my companions, enabling me to manipulate, analyze, and model data efficiently. Additionally, I explored popular data science libraries and frameworks such as TensorFlow, Scikit-learn, Pandas, and NumPy, which expedited the development and deployment of machine learning models. The arsenal of skills and tools I accumulated became my assets in the quest for data-driven insights.

The Real-World Challenge: Applying Data Science in Practice

Data science is not just an academic pursuit but rather a practical discipline aimed at solving real-world problems. Throughout my journey, I sought to identify such problems and apply data science methodologies to provide practical solutions. From predicting customer churn to optimizing supply chain logistics, the application of data science proved transformative in various domains. Sharing success stories of leveraging data science in practice inspires others to realize the power of this field.

Cultivating Curiosity: Continuous Learning and Skill Enhancement

Embracing a growth mindset is paramount in the world of data science. The field is rapidly evolving, with new algorithms, techniques, and tools emerging frequently. To stay ahead, it is essential to cultivate curiosity and foster a continuous learning mindset. Keeping abreast of the latest research papers, attending data science conferences, and engaging in data science courses nurtures personal and professional growth. The journey to becoming a data enthusiast is a lifelong pursuit.

Joining the Community: Networking and Collaboration

Being part of the data science community is a catalyst for growth and inspiration. Engaging with like-minded individuals, sharing knowledge, and collaborating on projects enhances the learning experience. Joining online forums, participating in Kaggle competitions, and attending meetups provides opportunities to exchange ideas, solve challenges collectively, and foster invaluable connections within the data science community.

Overcoming Obstacles: Dealing with Common Data Science Challenges

Data science, like any discipline, presents its own set of challenges. From data cleaning and preprocessing to model selection and evaluation, obstacles arise at each stage of the data science pipeline. Strategies and tips to overcome these challenges, such as building reliable pipelines, conducting robust experiments, and leveraging cross-validation techniques, are indispensable in maintaining motivation and achieving success in the data science journey.

Balancing Act: Building a Career in Data Science alongside Other Commitments

For many aspiring data scientists, the pursuit of knowledge and skills must coexist with other commitments, such as full-time jobs and personal responsibilities. Effectively managing time and developing a structured learning plan is crucial in striking a balance. Tips such as identifying pockets of dedicated learning time, breaking down complex concepts into manageable chunks, and seeking mentorships or online communities can empower individuals to navigate the data science journey while juggling other responsibilities.

Ethical Considerations: Navigating the World of Data Responsibly

As data scientists, we must navigate the world of data responsibly, being mindful of the ethical considerations inherent in this field. Safeguarding privacy, addressing bias in algorithms, and ensuring transparency in data-driven decision-making are critical principles. Exploring topics such as algorithmic fairness, data anonymization techniques, and the societal impact of data science encourages responsible and ethical practices in a rapidly evolving digital landscape.

Embarking on a data science adventure from a curious novice to a passionate data enthusiast is an exhilarating and rewarding journey. By laying a foundation of knowledge, exploring various data science disciplines, acquiring essential skills and tools, and engaging in continuous learning, one can conquer challenges, build a successful career, and have a positive influence on the data science community. It's a journey that never truly ends, as data continues to evolve and offer exciting opportunities for discovery and innovation. So, join me on your own data science adventure, and let the exploration begin!

#data science #data analytics #data visualization #big data #machine learning #artificial intelligence #education #information


SEMANTIC TREE AND AI TECHNOLOGIES

Semantic Tree learning and AI technologies can be combined to solve problems by leveraging the power of natural language processing and machine learning.

Semantic trees are a knowledge representation technique that organizes information in a hierarchical, tree-like structure.

Each node in the tree represents a concept or entity, and the connections between nodes represent the relationships between those concepts.

This structure allows for the representation of complex, interconnected knowledge in a way that can be easily navigated and reasoned about.
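As a rough illustration of that structure, the sketch below models a semantic tree in plain Python. The `ConceptNode` class and the animal taxonomy are hypothetical examples, not part of any library; each node holds one concept, and the parent-child links stand for "is a kind of" relationships.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ConceptNode:
    """One concept in the tree; children are more specific concepts."""

    name: str
    children: list[ConceptNode] = field(default_factory=list)

    def add(self, child_name: str) -> ConceptNode:
        """Attach a more specific concept and return it for chaining."""
        child = ConceptNode(child_name)
        self.children.append(child)
        return child

    def descendants(self):
        """Yield every concept below this node, depth first."""
        for child in self.children:
            yield child.name
            yield from child.descendants()


# Toy taxonomy: animal -> mammal -> (dog, cat), animal -> bird
animal = ConceptNode("animal")
mammal = animal.add("mammal")
mammal.add("dog")
mammal.add("cat")
animal.add("bird")

print(list(animal.descendants()))
print(list(mammal.descendants()))
```

Walking down from a node gives its more specific concepts, which is the kind of navigation the paragraph above describes.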

CONCEPTS

Semantic Tree: A structured representation where nodes correspond to concepts and edges denote relationships (e.g., hypernyms, hyponyms, synonyms).

Meaning: Understanding the context, nuances, and associations related to words or concepts.

Natural Language Understanding (NLU): AI techniques for comprehending and interpreting human language.

First Principles: Fundamental building blocks or core concepts in a domain.

AI (Artificial Intelligence): AI refers to the development of computer systems that can perform tasks that typically require human intelligence. AI technologies include machine learning, natural language processing, computer vision, and more. These technologies enable computers to understand, reason, learn, and make decisions.

Natural Language Processing (NLP): NLP is a branch of AI that focuses on the interaction between computers and human language. It involves the analysis and understanding of natural language text or speech by computers. NLP techniques are used to process, interpret, and generate human languages.

Machine Learning (ML): Machine Learning is a subset of AI that enables computers to learn and improve from experience without being explicitly programmed. ML algorithms can analyze data, identify patterns, and make predictions or decisions based on the learned patterns.

Deep Learning: A subset of machine learning that uses neural networks with multiple layers to learn complex patterns.

EXAMPLES OF APPLYING SEMANTIC TREE LEARNING WITH AI.

1. Text Classification: Semantic Tree learning can be combined with AI to solve text classification problems. By training a machine learning model on labeled data, the model can learn to classify text into different categories or labels. For example, a customer support system can use semantic tree learning to automatically categorize customer queries into different topics, such as billing, technical issues, or product inquiries.

2. Sentiment Analysis: Semantic Tree learning can be used with AI to perform sentiment analysis on text data. Sentiment analysis aims to determine the sentiment or emotion expressed in a piece of text, such as positive, negative, or neutral. By analyzing the semantic structure of the text using Semantic Tree learning techniques, machine learning models can classify the sentiment of customer reviews, social media posts, or feedback.

3. Question Answering: Semantic Tree learning combined with AI can be used for question answering systems. By understanding the semantic structure of questions and the context of the information being asked, machine learning models can provide accurate and relevant answers. For example, a chatbot can use Semantic Tree learning to understand user queries and provide appropriate responses based on the analyzed semantic structure.

4. Information Extraction: Semantic Tree learning can be applied with AI to extract structured information from unstructured text data. By analyzing the semantic relationships between entities and concepts in the text, machine learning models can identify and extract specific information. For example, an AI system can extract key information like names, dates, locations, or events from news articles or research papers.

Python Code Snippets for Semantic Tree Learning with AI

Here are four small Python code snippets that demonstrate how to apply Semantic Tree learning with AI using popular libraries:

1. Text Classification with scikit-learn:

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression

# Training data
texts = ['This is a positive review', 'This is a negative review', 'This is a neutral review']
labels = ['positive', 'negative', 'neutral']

# Vectorize the text data
vectorizer = TfidfVectorizer()
X = vectorizer.fit_transform(texts)

# Train a logistic regression classifier
classifier = LogisticRegression()
classifier.fit(X, labels)

# Predict the label for a new text
new_text = 'This is a positive sentiment'
new_text_vectorized = vectorizer.transform([new_text])
predicted_label = classifier.predict(new_text_vectorized)
print(predicted_label)
```

2. Sentiment Analysis with TextBlob:

```python
from textblob import TextBlob

# Analyze sentiment of a text
text = 'This is a positive sentence'
blob = TextBlob(text)
sentiment = blob.sentiment.polarity  # float in the range [-1.0, 1.0]

# Classify sentiment based on polarity
if sentiment > 0:
    sentiment_label = 'positive'
elif sentiment < 0:
    sentiment_label = 'negative'
else:
    sentiment_label = 'neutral'

print(sentiment_label)
```

3. Question Answering with Transformers:

```python
from transformers import pipeline

# Load the question answering model
qa_model = pipeline('question-answering')

# Provide context and ask a question
context = 'The Semantic Web is an extension of the World Wide Web.'
question = 'What is the Semantic Web?'

# Get the answer
answer = qa_model(question=question, context=context)
print(answer['answer'])
```

4. Information Extraction with spaCy:

```python
import spacy

# Load the English language model
nlp = spacy.load('en_core_web_sm')

# Process text and extract named entities
text = 'Apple Inc. is planning to open a new store in New York City.'
doc = nlp(text)

# Extract named entities
entities = [(ent.text, ent.label_) for ent in doc.ents]
print(entities)
```

APPLICATIONS OF SEMANTIC TREE LEARNING WITH AI

Semantic Tree learning combined with AI can be used in various domains and industries to solve problems. Here are some examples of where it can be applied:

1. Customer Support: Semantic Tree learning can be used to automatically categorize and route customer queries to the appropriate support teams, improving response times and customer satisfaction.

2. Social Media Analysis: Semantic Tree learning with AI can be applied to analyze social media posts, comments, and reviews to understand public sentiment, identify trends, and monitor brand reputation.

3. Information Retrieval: Semantic Tree learning can enhance search engines by understanding the meaning and context of user queries, providing more accurate and relevant search results.

4. Content Recommendation: By analyzing the semantic structure of user preferences and content metadata, Semantic Tree learning with AI can be used to personalize content recommendations in platforms like streaming services, news aggregators, or e-commerce websites.

Semantic Tree learning combined with AI technologies enables the understanding and analysis of text data, leading to improved problem-solving capabilities in various domains.

COMBINING SEMANTIC TREE AND AI FOR PROBLEM SOLVING

1. Semantic Reasoning: By integrating semantic trees with AI, systems can engage in more sophisticated reasoning and decision-making. The semantic tree provides a structured representation of knowledge, while AI techniques like natural language processing and knowledge representation can be used to navigate and reason about the information in the tree.

2. Explainable AI: Semantic trees can make AI systems more interpretable and explainable. The hierarchical structure of the tree can be used to trace the reasoning process and understand how the system arrived at a particular conclusion, which is important for building trust in AI-powered applications.

3. Knowledge Extraction and Representation: AI techniques like machine learning can be used to automatically construct semantic trees from unstructured data, such as text or images. This allows for the efficient extraction and representation of knowledge, which can then be used to power various problem-solving applications.

4. Hybrid Approaches: Combining semantic trees and AI can lead to hybrid approaches that leverage the strengths of both. For example, a system could use a semantic tree to represent domain knowledge and then apply AI techniques like reinforcement learning to optimize decision-making within that knowledge structure.
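The explainability point in item 2 can be sketched in a few lines: if knowledge is stored as a tree, the path from the root to a conclusion is itself the explanation. The nested-dictionary tree and the `trace` function below are toy assumptions made up for this example.

```python
# Hypothetical toy tree: each key is a concept, each value holds its sub-concepts
TREE = {
    "symptom": {
        "respiratory": {"cough": {}, "shortness of breath": {}},
        "digestive": {"nausea": {}},
    }
}


def trace(tree, target, path=()):
    """Return the list of concepts from the root down to target, or None."""
    for concept, subtree in tree.items():
        here = path + (concept,)
        if concept == target:
            return list(here)
        found = trace(subtree, target, here)
        if found:
            return found
    return None


explanation = trace(TREE, "cough")
print(" -> ".join(explanation))
print(trace(TREE, "fever"))
```

A system built this way can report the chain of concepts it followed alongside its answer, and can say plainly when a concept is not in its knowledge base at all.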

EXAMPLES OF APPLYING SEMANTIC TREE AND AI FOR PROBLEM SOLVING

1. Medical Diagnosis: A semantic tree could represent the relationships between symptoms, diseases, and treatments. AI techniques like natural language processing and machine learning could be used to analyze patient data, navigate the semantic tree, and provide personalized diagnosis and treatment recommendations.

2. Robotics and Autonomous Systems: Semantic trees could be used to represent the knowledge and decision-making processes of autonomous systems, such as self-driving cars or drones. AI techniques like computer vision and reinforcement learning could be used to navigate the semantic tree and make real-time decisions in dynamic environments.

3. Financial Analysis: Semantic trees could be used to model complex financial relationships and market dynamics. AI techniques like predictive analytics and natural language processing could be applied to the semantic tree to identify patterns, make forecasts, and support investment decisions.

4. Personalized Recommendation Systems: Semantic trees could be used to represent user preferences, interests, and behaviors. AI techniques like collaborative filtering and content-based recommendation could be used to navigate the semantic tree and provide personalized recommendations for products, content, or services.

PYTHON CODE SNIPPETS

1. Semantic Tree Construction using NetworkX:

```python
import networkx as nx
import matplotlib.pyplot as plt

# Create a semantic tree
G = nx.DiGraph()
G.add_node("root", label="Root")
G.add_node("concept1", label="Concept 1")
G.add_node("concept2", label="Concept 2")
G.add_node("concept3", label="Concept 3")
G.add_edge("root", "concept1")
G.add_edge("root", "concept2")
G.add_edge("concept2", "concept3")

# Visualize the semantic tree
pos = nx.spring_layout(G)
nx.draw(G, pos, with_labels=True)
plt.show()
```

2. Semantic Reasoning using PyKEEN:

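```python
import torch
from pykeen.pipeline import pipeline
from pykeen.triples import TriplesFactory

# Load a knowledge graph from a tab-separated file of (head, relation, tail) rows.
# The file name is a placeholder; point it at your own triples file.
tf = TriplesFactory.from_path("./dataset/triples.tsv")
training, testing = tf.split([0.8, 0.2])

# Train a TransE model on the knowledge graph
result = pipeline(
    training=training,
    testing=testing,
    model="TransE",
    training_kwargs=dict(num_epochs=100),
)
model = result.model

# Perform semantic reasoning: score one candidate triple.
# These labels must exist in the loaded dataset.
head = "concept1"
relation = "isRelatedTo"
tail = "concept3"
ids = [tf.entity_to_id[head], tf.relation_to_id[relation], tf.entity_to_id[tail]]
hrt = torch.tensor([ids], device=model.device)
score = model.score_hrt(hrt).item()
print(f"The score for the triple ({head}, {relation}, {tail}) is: {score}")
```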

3. Knowledge Extraction using spaCy:

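```python
import spacy
from spacy import displacy

# Load the spaCy model
nlp = spacy.load("en_core_web_sm")

# Parse the text; the dependency parse links each word to its syntactic head
text = "The quick brown fox jumps over the lazy dog."
doc = nlp(text)

# Visualize the extracted relations. This sentence contains no named entities,
# so the dependency view ("dep") is used instead of the entity view ("ent").
# In a Jupyter notebook this renders inline; elsewhere it returns the markup.
displacy.render(doc, style="dep")
```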

4. Hybrid Approach using Ray:

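```python
import ray
# Import path from older Ray releases; newer releases expose PPO
# under ray.rllib.algorithms instead.
from ray.rllib.agents.ppo import PPOTrainer
from ray.rllib.env.multi_agent_env import MultiAgentEnv
from ray.rllib.models.tf.tf_modelv2 import TFModelV2


# Define a custom model that integrates a semantic tree.
# This is a skeleton: training fails until the methods below are filled in.
class SemanticTreeModel(TFModelV2):
    def __init__(self, obs_space, action_space, num_outputs, model_config, name):
        super().__init__(obs_space, action_space, num_outputs, model_config, name)
        # Implement the integration of the semantic tree with the neural network

    def forward(self, input_dict, state, seq_lens):
        raise NotImplementedError("Combine tree features with the network here")


# Define a multi-agent environment that uses the semantic tree model
class SemanticTreeEnv(MultiAgentEnv):
    def __init__(self, env_config=None):
        super().__init__()
        self.semantic_tree = None  # Initialize the semantic tree
        self.agents = []  # Define the agents

    def reset(self):
        raise NotImplementedError("Return the initial observation for each agent")

    def step(self, actions):
        # Implement the environment dynamics using the semantic tree
        raise NotImplementedError("Advance the environment by one step")


# Train the hybrid model using Ray
ray.init()
config = {
    "env": SemanticTreeEnv,
    "model": {
        "custom_model": SemanticTreeModel,
    },
}
trainer = PPOTrainer(config=config)
trainer.train()
```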

APPLICATIONS

The combination of semantic trees and AI can be applied to a wide range of problem domains, including:

- Healthcare: Improving medical diagnosis, treatment planning, and drug discovery.

- Finance: Enhancing investment strategies, risk management, and fraud detection.

- Robotics and Autonomous Systems: Enabling more intelligent and adaptable decision-making in complex environments.

- Education: Personalizing learning experiences and providing intelligent tutoring systems.

- Smart Cities: Optimizing urban planning, transportation, and resource management.

- Environmental Conservation: Modeling and predicting environmental changes, and supporting sustainable decision-making.

- Chatbots and Virtual Assistants:

Use semantic trees to understand user queries and provide context-aware responses.

Apply NLU models to extract meaning from user input.

- Information Retrieval:

Build semantic search engines that understand user intent beyond keyword matching.

Combine semantic trees with vector embeddings (e.g., BERT) for better search results.

- Medical Diagnosis:

Create semantic trees for medical conditions, symptoms, and treatments.

Use AI to match patient symptoms to relevant diagnoses.

- Automated Content Generation:

Construct semantic trees for topics (e.g., climate change, finance).

Generate articles, summaries, or reports based on semantic understanding.
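
To make the idea of matching a query against a semantic tree more concrete, here is a minimal sketch. The `TreeNode` class, the example tree and the keyword-overlap scoring are all illustrative assumptions rather than part of any library; a real system would more likely compare embeddings than raw keywords.

```python
from dataclasses import dataclass, field


@dataclass
class TreeNode:
    """One concept in a toy semantic tree (hypothetical structure)."""
    label: str
    keywords: set[str]
    children: list["TreeNode"] = field(default_factory=list)


def best_match(node: TreeNode, query: str) -> tuple[str, int]:
    """Return (label, overlap) for the node whose keywords overlap the query most."""
    tokens = set(query.lower().split())
    best = (node.label, len(node.keywords & tokens))
    for child in node.children:
        candidate = best_match(child, query)
        if candidate[1] > best[1]:
            best = candidate
    return best


# Made-up example tree: two conditions grouped under a root concept.
tree = TreeNode("condition", set(), [
    TreeNode("influenza", {"fever", "cough", "aches"}),
    TreeNode("migraine", {"headache", "nausea", "light"}),
])

label, score = best_match(tree, "patient reports fever and cough")
print(label, score)
```

The recursion visits every node, so the same function works for deeper trees; ties are resolved in favor of the node visited first.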

RDIDINI PROMPT ENGINEER

#semantic tree#ai solutions#ai-driven#ai trends#ai system#ai model#ai prompt#ml#ai predictions#llm#dl#nlp


Cracking the Code: A Beginner's Roadmap to Mastering Data Science

Embarking on the journey into data science as a complete novice is an exciting venture. While the world of data science may seem daunting at first, breaking down the learning process into manageable steps can make the endeavor both enjoyable and rewarding. Choosing the best Data Science Institute can further accelerate your journey into this thriving industry.

In this comprehensive guide, we'll outline a roadmap for beginners to get started with data science, from understanding the basics to building a portfolio of projects.

1. Understanding the Basics: Laying the Foundation

The journey begins with a solid understanding of the fundamentals of data science. Start by familiarizing yourself with key concepts such as data types, variables, and basic statistics. Platforms like Khan Academy, Coursera, and edX offer introductory courses in statistics and data science, providing a solid foundation for your learning journey.

2. Learn Programming Languages: The Language of Data Science

Programming is a crucial skill in data science, and Python is one of the most widely used languages in the field. Platforms like Codecademy, DataCamp, and freeCodeCamp offer interactive lessons and projects to help beginners get hands-on experience with Python. Additionally, learning R, another popular language in data science, can broaden your skill set.

3. Explore Data Visualization: Bringing Data to Life

Data visualization is a powerful tool for understanding and communicating data. Explore tools like Tableau for creating interactive visualizations or dive into Python libraries like Matplotlib and Seaborn. Understanding how to present data visually enhances your ability to derive insights and convey information effectively.

4. Master Data Manipulation: Unlocking Data's Potential

Data manipulation is a fundamental aspect of data science. Learn how to manipulate and analyze data using libraries like Pandas in Python. The official Pandas website provides tutorials and documentation to guide you through the basics of data manipulation, a skill that is essential for any data scientist.

5. Delve into Machine Learning Basics: The Heart of Data Science

Machine learning is a core component of data science. Start exploring the fundamentals of machine learning on platforms like Kaggle, which offers beginner-friendly datasets and competitions. Participating in Kaggle competitions allows you to apply your knowledge, learn from others, and gain practical experience in machine learning.

6. Take Online Courses: Structured Learning Paths

Enroll in online courses that provide structured learning paths in data science. Platforms like Coursera (e.g., "Data Science and Machine Learning Bootcamp with R" or "Applied Data Science with Python") and edX (e.g., "Harvard's Data Science Professional Certificate") offer comprehensive courses taught by experts in the field.

7. Read Books and Blogs: Supplementing Your Knowledge

Books and blogs can provide additional insights and practical tips. "Python for Data Analysis" by Wes McKinney is a highly recommended book, and blogs like Towards Data Science on Medium offer a wealth of articles covering various data science topics. These resources can deepen your understanding and offer different perspectives on the subject.

8. Join Online Communities: Learning Through Connection

Engage with the data science community by joining online platforms like Stack Overflow, Reddit (e.g., r/datascience), and LinkedIn. Participate in discussions, ask questions, and learn from the experiences of others. Being part of a community provides valuable support and insights.

9. Work on Real Projects: Applying Your Skills

Apply your skills by working on real-world projects. Identify a problem or area of interest, find a dataset, and start working on analysis and predictions. Whether it's predicting housing prices, analyzing social media sentiment, or exploring healthcare data, hands-on projects are crucial for developing practical skills.

10. Attend Webinars and Conferences: Staying Updated

Stay updated on the latest trends and advancements in data science by attending webinars and conferences. Platforms like Data Science Central and conferences like the Data Science Conference provide opportunities to learn from experts, discover new technologies, and connect with the wider data science community.

11. Build a Portfolio: Showcasing Your Journey

Create a portfolio showcasing your projects and skills. This can be a GitHub repository or a personal website where you document and present your work. A portfolio is a powerful tool for demonstrating your capabilities to potential employers and collaborators.

12. Practice Regularly: The Path to Mastery

Consistent practice is key to mastering data science. Dedicate regular time to coding, explore new datasets, and challenge yourself with increasingly complex projects. As you progress, you'll find that your skills evolve, and you become more confident in tackling advanced data science challenges.

Embarking on the path of data science as a beginner may seem like a formidable task, but with the right resources and a structured approach, it becomes an exciting and achievable endeavor. From understanding the basics to building a portfolio of real-world projects, each step contributes to your growth as a data scientist. Embrace the learning process, stay curious, and celebrate the milestones along the way. The world of data science is vast and dynamic, and your journey is just beginning. Choosing the best Data Science courses in Chennai is a crucial step in acquiring the necessary expertise for a successful career in the evolving landscape of data science.


Advanced Crypto Investment Analysis Strategies for 2025 – A Deep Dive by Academy Darkex

As we step into 2025, the landscape of digital assets continues to mature, demanding more sophisticated strategies from investors. Gone are the days when a simple buy-and-hold method could yield exponential gains. Today, success in the market hinges on deep, data-driven insights and strategic execution. At Academy Darkex, we believe that empowering investors with advanced crypto investment analysis tools and methodologies is the key to long-term success in this evolving space.

In this blog, we explore cutting-edge strategies in crypto investment analysis tailored for the high-stakes environment of 2025.

1. On-Chain Data Analysis – Decoding Blockchain Behavior

One of the most potent trends redefining crypto investment analysis is the use of on-chain metrics. In 2025, investors are leveraging tools that analyze wallet movements, miner behavior, staking trends, and whale activity to predict market sentiment. Platforms like Glassnode, Nansen, and IntoTheBlock are no longer optional—they're essential.

Academy Darkex Insight: Wallet outflows from centralized exchanges often precede bullish price action. Our students learn how to track these flows in real time for predictive trading decisions.

2. AI-Powered Predictive Models

Machine learning and AI have moved from buzzwords to foundational tools in crypto investment analysis. Predictive models analyze historical price movements, news sentiment, and macroeconomic factors to forecast market trends with impressive accuracy.

Strategy Highlight: Academy Darkex trains users on creating custom AI models using Python and TensorFlow to generate buy/sell signals based on dynamic datasets.

3. Cross-Market Correlation Analysis

Understanding how crypto interacts with traditional markets (stocks, commodities, interest rates) has become vital. In 2025, advanced investors apply correlation matrices and regression models to assess how events like Fed rate hikes or oil price swings affect crypto volatility.

Pro Tip: At Academy Darkex, we provide weekly macro-crypto correlation reports to help our members spot trends before they hit the mainstream.
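
As a rough illustration of the correlation analysis described in this section, the sketch below computes a Pearson correlation between two return series. The numbers are made up for the example, and the `pearson` helper is written out by hand rather than taken from a library.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical daily returns (in percent) for a crypto asset and a stock index.
crypto_returns = [2.1, -1.4, 0.8, 3.0, -2.2]
index_returns = [0.5, -0.3, 0.1, 0.9, -0.6]

print(f"correlation: {pearson(crypto_returns, index_returns):.2f}")
```

A value near 1 means the two series tend to move together, a value near -1 means they move in opposite directions, and a value near 0 suggests no linear relationship.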

4. DeFi Metrics & Protocol Health Indicators

With the explosion of DeFi, analyzing protocol fundamentals—such as total value locked (TVL), yield sustainability, and governance activity—has become integral to any robust crypto investment analysis framework.

Academy Darkex Masterclass: Learn how to identify protocol stress signals, such as liquidity drains or governance disputes, before they impact token value.

5. Sentiment and Narrative Tracking

In 2025, crypto is as much about psychology as it is about math. Advanced sentiment analysis tools now scrape Reddit, X (formerly Twitter), Discord, and news sites to detect emerging narratives and potential FOMO or FUD events.

Edge for Investors: Academy Darkex equips traders with dashboards that integrate sentiment scores directly into their investment models for early detection of trend reversals.

Final Thoughts

Crypto investment analysis is no longer a niche skill—it’s a necessity. At Academy Darkex, we stand at the forefront of crypto education, offering our community the strategies, tools, and training required to thrive in 2025’s complex digital asset market.

Whether you're a seasoned investor or an ambitious beginner, now is the time to level up your analytical game. Dive into the future of finance with Academy Darkex and make every data point work for your portfolio.

#crypto investment analysis#AcademyDarkex#OnChainAnalysis#CryptoEducation#BlockchainAnalytics#CryptoMarketTrends


Tips for Breaking into the AI Cloud Industry

Think of a single AI system that processes over 160 billion transactions annually, identifying fraudulent activities within milliseconds. This is not a futuristic concept but a current reality at Mastercard, where AI-driven solutions have significantly enhanced fraud detection capabilities. Their flagship system, Decision Intelligence, assigns risk scores to transactions in real time, effectively safeguarding consumers from unauthorized activities.

In the healthcare sector, organizations like Humana have leveraged AI to detect and prevent fraudulent claims. By analyzing thousands of claims daily, their AI-powered fraud detection system has eliminated potential fraudulent actions worth over $10 million in its first year. (ClarionTech)

These examples underscore the transformative impact of AI cloud systems across various industries. As businesses continue to adopt these technologies, the demand for professionals skilled in both AI and cloud computing is surging. To meet this demand, individuals are turning to specialized certifications.

Certifications such as the AWS AI Certification, Azure AI Certification, and Google Cloud AI Certification are becoming essential credentials for those looking to excel in this field. These programs provide comprehensive training in deploying and managing AI solutions on their respective cloud platforms, equipping professionals with the skills to navigate the evolving technological landscape.

For those aspiring to enter this dynamic industry, it’s crucial to learn AI cloud systems and enroll in AI cloud training programs that offer practical, hands-on experience. By doing so, professionals can position themselves at the forefront of innovation, ready to tackle challenges and drive progress in the AI cloud domain.

If you’re looking to break into the AI cloud industry, you’re on the right track. This guide shares real-world tips to help you land your dream role, with insights on what to learn, which AI cloud certifications to pursue, and how to stand out in a rapidly evolving tech space.

1. Understand the AI Cloud Ecosystem

Before diving in, it’s critical to understand what the AI cloud ecosystem looks like.

At its core, the industry is powered by major players like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). These platforms offer the infrastructure, tools, and APIs needed to train, deploy, and manage AI models at scale.

Companies are increasingly looking for professionals who can learn AI cloud systems and use them to deliver results. That could mean deploying a machine learning model to recognize medical images or training a large language model for customer support automation.

2. Build a Strong Foundation in AI and Cloud

You don’t need a Ph.D. to get started, but you do need foundational knowledge. Here’s what you should focus on:

Programming Languages: Python is essential for AI, while JavaScript, Java, and Go are common in cloud environments.

Mathematics & Algorithms: A solid grasp of linear algebra, statistics, and calculus helps you understand how AI models work.

Cloud Fundamentals: Learn how storage, compute, containers (and orchestrators like Kubernetes), and serverless functions work in cloud ecosystems.

Free resources like IBM SkillsBuild and Coursera offer solid entry-level courses. But if you’re serious about leveling up, it’s time to enroll in AI cloud training that’s tailored to real-world applications.

3. Get Hands-On with Projects

Theory alone won’t get you hired—practical experience is the key. Build personal projects that show your ability to apply AI to solve real-world problems.

For example:

Use Google Cloud AI to deploy a sentiment analysis tool.

Train an image recognition model using AWS SageMaker.

Build a chatbot with Azure’s Cognitive Services.

Share your work on GitHub and LinkedIn. Recruiters love candidates who not only understand the tools but can demonstrate how they have used them.

4. Earn an AI Cloud Certification That Counts

One of the most impactful things you can do for your career is to earn a recognized AI cloud certification. These credentials show employers that you have the technical skills to hit the ground running.

Here are three standout certifications to consider:

AWS AI Certification – Ideal if you’re working with services like SageMaker, Rekognition, or Lex. It’s great for machine learning engineers and data scientists.

Azure AI Certification – This credential is best if you’re deploying AI through Microsoft tools, such as Azure Machine Learning, Bot Services, or Form Recognizer.

Google Cloud AI Certification – This one validates your ability to design and build ML models using Vertex AI and TensorFlow on GCP.

These certifications not only sharpen your skills but also significantly boost your resume. Many employers now prefer or even require an AI cloud certification for roles in AI engineering and data science.

5. Stay Current with Industry Trends

The AI cloud field changes quickly. New tools, platforms, and best practices emerge almost monthly. Stay informed by:

Following blogs by AWS, Google Cloud, and Microsoft

Joining LinkedIn groups and Reddit communities focused on AI and cloud

Attending free webinars and local meetups

For example, Nvidia recently introduced DGX Cloud Lepton—a new service aimed at making high-powered GPUs more accessible for developers via the cloud. Understanding innovations like this keeps you ahead of the curve.

6. Network Like Your Career Depends on It (Because It Does)

Many people underestimate the power of networking in the tech industry. Join forums, attend AI meetups, and don’t be afraid to slide into a LinkedIn DM to ask someone about their job in the AI cloud space.

Even better, start building your brand by sharing what you’re learning. Write LinkedIn posts, Medium articles, or even record YouTube tutorials. This not only reinforces your knowledge but also makes you more visible to potential employers and collaborators.

7. Ace the Interview Process

You’ve done the training, the certs, and built a few cool projects—now it’s time to land the job.

AI cloud interviews usually include:

Technical assessments (coding, cloud architecture, model evaluation)

Case studies (e.g., “How would you build a recommendation engine on GCP?”)

Behavioral interviews to assess team fit and communication skills

Prepare by practicing problems on HackerRank or LeetCode, and be ready to talk about your projects and certifications in depth. Showing off your Google Cloud AI certification, for instance, is impressive, but tying it back to a project where you built and deployed a real-world application? That’s what seals the deal.

Start Small, Think Big

Breaking into the AI cloud industry might feel intimidating, but remember: everyone starts somewhere. The important thing is to start.

Learn AI cloud systems by taking free courses.

Enroll in AI cloud training that offers hands-on labs and practical projects.

Earn an AI cloud certification—whether it’s AWS AI Certification, Azure AI Certification, or Google Cloud AI Certification.

And most importantly, stay curious, stay consistent, and keep building.

There’s never been a better time to start your journey. Begin with AI CERTs! If you’re serious about building a future-proof career at the intersection of artificial intelligence and cloud computing, consider the AI+ Cloud Certification. This certification is designed for professionals who want to master real-world AI applications on platforms like AWS, Azure, and Google Cloud.

Enroll today!



Data Science Trending in 2025

What is Data Science?

Data Science is an interdisciplinary field that combines scientific methods, processes, algorithms, and systems to extract knowledge and insights from structured and unstructured data. It blends various tools, algorithms, and machine learning principles with the goal of discovering hidden patterns in raw data.

Introduction to Data Science

In the digital era, data is being generated at an unprecedented scale—from social media interactions and financial transactions to IoT sensors and scientific research. This massive amount of data is often referred to as "Big Data." Making sense of this data requires specialized techniques and expertise, which is where Data Science comes into play.

Data Science enables organizations and researchers to transform raw data into meaningful information that can help make informed decisions, predict trends, and solve complex problems.

History and Evolution

The term "Data Science" was first coined in the 1960s, but the field has evolved significantly over the past few decades, particularly with the rise of big data and advancements in computing power.

Early days: Initially, data analysis was limited to simple statistical methods.

Growth of databases: With the emergence of databases, data management and retrieval improved.

Rise of machine learning: The integration of algorithms that can learn from data added a predictive dimension.

Big Data Era: Modern data science deals with massive volumes, velocity, and variety of data, leveraging distributed computing frameworks like Hadoop and Spark.

Components of Data Science

1. Data Collection and Storage

Data can come from multiple sources:

Databases (SQL, NoSQL)

APIs

Web scraping

Sensors and IoT devices

Social media platforms

The collected data is often stored in data warehouses or data lakes.

2. Data Cleaning and Preparation

Raw data is often messy—containing missing values, inconsistencies, and errors. Data cleaning involves:

Handling missing or corrupted data

Removing duplicates

Normalizing and transforming data into usable formats
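
The three cleaning steps above can be sketched with plain Python. This is a minimal example under stated assumptions: the records and the `name`/`value` field names are invented, incomplete rows are simply dropped (imputation is another common choice), and normalization here means min-max scaling.

```python
def clean(records: list[dict]) -> list[dict]:
    """Drop incomplete rows, remove duplicates, and min-max scale 'value'."""
    # 1. Handle missing data: here we simply drop incomplete rows.
    complete = [r for r in records if r.get("name") and r.get("value") is not None]

    # 2. Remove duplicates while keeping the first occurrence.
    seen = set()
    unique = []
    for r in complete:
        key = (r["name"], r["value"])
        if key not in seen:
            seen.add(key)
            unique.append(r)
    if not unique:
        return []

    # 3. Normalize 'value' to the range [0, 1] (min-max scaling).
    values = [r["value"] for r in unique]
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1  # avoid division by zero when all values are equal
    return [{"name": r["name"], "value": (r["value"] - lo) / span} for r in unique]


raw = [
    {"name": "a", "value": 10},
    {"name": "b", "value": None},  # missing value
    {"name": "a", "value": 10},    # duplicate
    {"name": "c", "value": 30},
    {"name": "d", "value": 20},
]
print(clean(raw))
```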

3. Exploratory Data Analysis (EDA)

Before modeling, data scientists explore data visually and statistically to understand its main characteristics. Techniques include:

Summary statistics (mean, median, mode)

Data visualization (histograms, scatter plots)

Correlation analysis
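
As a small illustration of the summary statistics and histogram techniques listed above, the following sketch uses only the standard library. The sample values are made up, and the text histogram stands in for a proper plot.

```python
import statistics
from collections import Counter

ages = [23, 25, 25, 31, 35, 35, 35, 42, 51]  # made-up sample

# Summary statistics
print("mean:", round(statistics.mean(ages), 2))
print("median:", statistics.median(ages))
print("mode:", statistics.mode(ages))

# A crude text histogram: bucket values by decade and count them.
buckets = Counter((age // 10) * 10 for age in ages)
for start in sorted(buckets):
    print(f"{start}-{start + 9}: {'#' * buckets[start]}")
```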

4. Data Modeling and Machine Learning

Data scientists apply statistical models and machine learning algorithms to:

Identify patterns

Make predictions

Classify data into categories

Common models include regression, decision trees, clustering, and neural networks.
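
To show what the simplest of these models looks like in practice, here is a minimal sketch of a one-variable linear regression fitted by ordinary least squares. The `fit_line` helper and the study-hours data are assumptions made for the example; in real projects a library such as scikit-learn would normally be used.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: hours studied vs. exam score.
hours = [1, 2, 3, 4, 5]
scores = [52, 54, 61, 63, 70]

slope, intercept = fit_line(hours, scores)
print(f"score ~ {slope:.1f} * hours + {intercept:.1f}")
print(f"predicted score for 6 hours: {slope * 6 + intercept:.1f}")
```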

5. Interpretation and Communication

The results need to be interpreted and communicated clearly to stakeholders. Visualization tools like Tableau, Power BI, or matplotlib in Python help convey insights effectively.

Techniques and Tools in Data Science

Statistical Analysis

Foundational for understanding data properties and relationships.

Machine Learning

Supervised and unsupervised learning for predictions and pattern recognition.

Deep Learning

Advanced neural networks for complex tasks like image and speech recognition.

Natural Language Processing (NLP)

Techniques to analyze and generate human language.

Big Data Technologies

Hadoop, Spark, Kafka for handling massive datasets.

Programming Languages

Python: The most popular language due to its libraries like pandas, NumPy, scikit-learn.

R: Preferred for statistical analysis.

SQL: For database querying.

Applications of Data Science

Data Science is used across industries:

Healthcare: Predicting disease outbreaks, personalized medicine, medical image analysis.

Finance: Fraud detection, credit scoring, algorithmic trading.

Marketing: Customer segmentation, recommendation systems, sentiment analysis.

Manufacturing: Predictive maintenance, supply chain optimization.

Transportation: Route optimization, autonomous vehicles.

Entertainment: Content recommendation on platforms like Netflix and Spotify.

Challenges in Data Science

Data Quality: Poor data can lead to inaccurate results.

Data Privacy and Ethics: Ensuring responsible use of data and compliance with regulations.

Skill Gap: Requires multidisciplinary knowledge in statistics, programming, and domain expertise.

Scalability: Handling and processing vast amounts of data efficiently.

Future of Data Science

The future promises further integration of artificial intelligence and automation in data science workflows. Explainable AI, augmented analytics, and real-time data processing are areas of rapid growth.

As data continues to grow exponentially, the importance of data science in guiding strategic decisions and innovation across sectors will only increase.

Conclusion

Data Science is a transformative field that unlocks the power of data to solve real-world problems. Through a combination of techniques from statistics, computer science, and domain knowledge, data scientists help organizations make smarter decisions, innovate, and gain a competitive edge.

Whether you are a student, professional, or business leader, understanding data science and its potential can open doors to exciting opportunities and advancements in technology and society.


A Comprehensive Guide to Scraping DoorDash Restaurant and Menu Data

Introduction

Data is everything to a food delivery business that wants to optimize pricing, understand customer preferences, and keep up with market trends. Scraping DoorDash restaurant data lets a business extract that information from the platform, a major competitor in the food delivery space.

This guide walks through DoorDash menu data scraping: how to scrape DoorDash food delivery data efficiently, and which tools you need to scrape DoorDash restaurant data successfully.

Why Scrape DoorDash Restaurant and Menu Data?

Market Research & Competitive Analysis: Gaining insights into competitor pricing, popular dishes, and restaurant performance helps businesses refine their strategies.

Restaurant Performance Evaluation: DoorDash Restaurant Data Analysis allows businesses to monitor ratings, customer reviews, and service efficiency.

Menu Optimization & Price Monitoring: Tracking menu prices and dish popularity helps restaurants and food aggregators optimize their offerings.

Customer Sentiment & Review Analysis: Scraping DoorDash reviews provides businesses with insights into customer preferences and dining trends.

Delivery Time & Logistics Insights: Analyzing delivery estimates, peak hours, and order fulfillment data can improve logistics and delivery efficiency.

Legal Considerations of DoorDash Data Scraping

Before proceeding, it is crucial to consider the legal and ethical aspects of web scraping.

Key Considerations:

Respect DoorDash’s Robots.txt File – Always check and comply with their web scraping policies.

Avoid Overloading Servers – Use rate-limiting techniques to avoid excessive requests.

Ensure Ethical Data Use – Extracted data should be used for legitimate business intelligence and analytics.
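
The first two considerations can be sketched with Python's standard library. This is a minimal example under stated assumptions: the robots.txt content, the `example-bot` user agent and the example.com URLs are invented for illustration, and a real crawler would load the site's own file with `set_url(...)` and `read()` instead of parsing an inline string.

```python
import time
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt content (not DoorDash's actual file).
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

parser = RobotFileParser()
parser.parse(SAMPLE_ROBOTS.splitlines())

USER_AGENT = "example-bot"  # hypothetical crawler name


def allowed(url: str) -> bool:
    """True if the parsed robots.txt rules permit fetching this URL."""
    return parser.can_fetch(USER_AGENT, url)


def polite_delay(default: float = 1.0) -> float:
    """Seconds to wait between requests: the Crawl-delay if given, else a default."""
    delay = parser.crawl_delay(USER_AGENT)
    return float(delay) if delay is not None else default


def crawl(urls, fetch, delay=None):
    """Call fetch(url) for each permitted URL, pausing between requests."""
    wait = polite_delay() if delay is None else delay
    fetched = []
    for url in urls:
        if not allowed(url):
            continue  # respect the Disallow rule
        fetch(url)
        fetched.append(url)
        time.sleep(wait)  # simple rate limiting
    return fetched


# Demo with a stand-in fetch function and no waiting; drop delay=0 in real use.
crawl(
    ["https://example.com/menu", "https://example.com/private/data"],
    fetch=print,
    delay=0,
)
```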

Setting Up Your DoorDash Data Scraping Environment

To scrape DoorDash food delivery data successfully, you need the right tools and frameworks.

1. Programming Languages

Python – The most commonly used language for web scraping.

JavaScript (Node.js) – Effective for handling dynamic pages.

2. Web Scraping Libraries

BeautifulSoup – For extracting HTML data from static pages.

Scrapy – A powerful web crawling framework.

Selenium – Used for scraping dynamic JavaScript-rendered content.

Puppeteer – A headless browser tool for interacting with complex pages.

3. Data Storage & Processing

CSV/Excel – For small-scale data storage and analysis.

MySQL/PostgreSQL – For managing large datasets.

MongoDB – NoSQL storage for flexible data handling.

Step-by-Step Guide to Scraping DoorDash Restaurant and Menu Data

Step 1: Understanding DoorDash’s Website Structure

DoorDash loads data dynamically using AJAX, requiring network request analysis using Developer Tools.

Step 2: Identify Key Data Points

Restaurant name, location, and rating

Menu items, pricing, and availability

Delivery time estimates

Customer reviews and sentiments
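
One way to keep these data points organized is to define a record type before writing any scraping code. The sketch below is an assumption about how such a record might look; the class and field names are hypothetical and not taken from DoorDash.

```python
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class MenuItem:
    name: str
    price: float
    available: bool = True


@dataclass
class RestaurantRecord:
    name: str
    location: str
    rating: Optional[float] = None
    delivery_minutes: Optional[int] = None
    menu: list[MenuItem] = field(default_factory=list)
    reviews: list[str] = field(default_factory=list)


# Example record with made-up values.
record = RestaurantRecord(
    name="ABC Cafe",
    location="Example City",
    rating=4.5,
    delivery_minutes=30,
    menu=[MenuItem("Burger", 9.50)],
    reviews=["The delivery was fast and the food was great!"],
)
print(asdict(record))
```

`asdict` converts the nested record into plain dictionaries and lists, which makes it straightforward to write to CSV, JSON, or a database later.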

Step 3: Extract Data Using Python

Using BeautifulSoup for Static Data

    import requests
    from bs4 import BeautifulSoup

    url = "https://www.doordash.com/restaurants"
    headers = {"User-Agent": "Mozilla/5.0"}
    response = requests.get(url, headers=headers)
    soup = BeautifulSoup(response.text, "html.parser")

    restaurants = soup.find_all("div", class_="restaurant-name")
    for restaurant in restaurants:
        print(restaurant.text)

Using Selenium for Dynamic Content

    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.chrome.service import Service

    service = Service("path_to_chromedriver")
    driver = webdriver.Chrome(service=service)
    driver.get("https://www.doordash.com")

    restaurants = driver.find_elements(By.CLASS_NAME, "restaurant-name")
    for restaurant in restaurants:
        print(restaurant.text)

    driver.quit()

Step 4: Handling Anti-Scraping Measures

Use rotating proxies (ScraperAPI, BrightData).

Implement headless browsing with Puppeteer or Selenium.

Randomize user agents and request headers.

Step 5: Store and Analyze the Data

Convert extracted data into CSV or store it in a database for advanced analysis.

import pandas as pd

data = {"Restaurant": ["ABC Cafe", "XYZ Diner"], "Rating": [4.5, 4.2]}
df = pd.DataFrame(data)
df.to_csv("doordash_data.csv", index=False)
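For the database route, here is a minimal sketch using SQLite from Python's standard library. SQLite is swapped in purely for illustration because it needs no server; the table name, column names, and the save_restaurants helper are assumptions, and the same idea carries over to MySQL, PostgreSQL, or MongoDB with their own drivers.

```python
import sqlite3


def save_restaurants(conn, rows):
    """Create the table if needed and insert (name, rating) tuples."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS restaurants (name TEXT PRIMARY KEY, rating REAL)"
    )
    # INSERT OR REPLACE keeps reruns from duplicating a restaurant.
    conn.executemany(
        "INSERT OR REPLACE INTO restaurants (name, rating) VALUES (?, ?)", rows
    )
    conn.commit()


conn = sqlite3.connect(":memory:")  # pass a file path instead to persist the data
save_restaurants(conn, [("ABC Cafe", 4.5), ("XYZ Diner", 4.2)])

# Query the stored rows, highest rating first
top = conn.execute(
    "SELECT name, rating FROM restaurants ORDER BY rating DESC"
).fetchall()
print(top)
```

Keying the table on the restaurant name means a repeated scrape updates the existing row rather than adding a second copy.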

Analyzing Scraped DoorDash Data

1. Price Comparison & Market Analysis

Compare menu prices across different restaurants to identify trends and pricing strategies.
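One simple way to start such a comparison is to group prices by menu item and look at the cheapest seller, the average, and the spread. The rows below are made-up sample data and compare_prices is a hypothetical helper, so treat this as a sketch rather than a finished analysis.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical scraped rows: (restaurant, item, price in USD).
menu_rows = [
    ("ABC Cafe", "Burger", 9.50),
    ("XYZ Diner", "Burger", 11.00),
    ("ABC Cafe", "Fries", 3.50),
    ("XYZ Diner", "Fries", 4.00),
]


def compare_prices(rows):
    """Map each item to its cheapest seller, average price, and price spread."""
    by_item = defaultdict(list)
    for restaurant, item, price in rows:
        by_item[item].append((price, restaurant))

    summary = {}
    for item, offers in by_item.items():
        prices = [price for price, _ in offers]
        summary[item] = {
            "cheapest": min(offers)[1],  # restaurant with the lowest price
            "average": round(mean(prices), 2),
            "spread": round(max(prices) - min(prices), 2),
        }
    return summary


price_summary = compare_prices(menu_rows)
for item, stats in price_summary.items():
    print(item, stats)
```

A large spread for the same item is usually the first place to look when studying pricing strategies.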

2. Customer Reviews Sentiment Analysis

Use NLP to analyze customer feedback and gauge satisfaction.

from textblob import TextBlob

review = "The delivery was fast and the food was great!"

# Polarity ranges from -1.0 (negative) to 1.0 (positive)
sentiment = TextBlob(review).sentiment.polarity
print("Sentiment Score:", sentiment)
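A raw polarity score is easier to report once it is bucketed into labels. The sketch below shows one possible way to do that; the 0.1 threshold and both helper names are assumptions chosen for illustration, not TextBlob defaults.

```python
def label_polarity(score, threshold=0.1):
    """Bucket a polarity score (range -1.0 to 1.0) into a sentiment label."""
    if score > threshold:
        return "positive"
    if score < -threshold:
        return "negative"
    return "neutral"


def label_reviews(reviews):
    """Score each review with TextBlob and attach a label."""
    # Imported here so label_polarity stays usable without TextBlob installed.
    from textblob import TextBlob

    return [
        (review, label_polarity(TextBlob(review).sentiment.polarity))
        for review in reviews
    ]
```

Calling label_reviews on a list of scraped review strings returns (review, label) pairs that can be counted or written to CSV alongside the ratings.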

3. Delivery Time Optimization

Analyze delivery time patterns to improve efficiency.
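As a starting point, delivery estimates can be grouped by hour of day to see when waits are longest. The observations below are invented sample values and median_by_hour is a hypothetical helper.

```python
from collections import defaultdict
from statistics import median

# Hypothetical observations: (hour of day, estimated delivery minutes).
observations = [(12, 35), (12, 45), (12, 40), (15, 25), (15, 20), (19, 50), (19, 60)]


def median_by_hour(rows):
    """Return the median delivery estimate for each hour, ordered by hour."""
    by_hour = defaultdict(list)
    for hour, minutes in rows:
        by_hour[hour].append(minutes)
    return {hour: median(values) for hour, values in sorted(by_hour.items())}


medians = median_by_hour(observations)
slowest_hour = max(medians, key=medians.get)  # hour with the longest median wait
print(medians, slowest_hour)
```

The median is used instead of the mean so that a single unusually long estimate does not distort the picture for that hour.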

Challenges & Solutions in DoorDash Data Scraping

Challenge – Solution

Dynamic Content Loading – Use Selenium or Puppeteer

CAPTCHA Restrictions – Use CAPTCHA-solving services

IP Blocking – Implement rotating proxies

Data Structure Changes – Regularly update scraping scripts

Ethical Considerations & Best Practices

Follow robots.txt guidelines to respect DoorDash’s policies.

Implement rate-limiting to prevent excessive server requests.

Avoid using data for fraudulent or unethical purposes.

Ensure compliance with data privacy regulations (GDPR, CCPA).
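The first two practices can be sketched with Python's standard library alone. The robots.txt rules below are a made-up sample rather than DoorDash's actual file, and make_robot_checker and RateLimiter are hypothetical helpers written for this example.

```python
import time
from urllib.robotparser import RobotFileParser


def make_robot_checker(robots_txt):
    """Parse robots.txt text and return a function that checks a URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return lambda url, agent="*": parser.can_fetch(agent, url)


class RateLimiter:
    """Enforce a minimum delay between consecutive calls to wait()."""

    def __init__(self, min_interval):
        self.min_interval = min_interval
        self._last = None

    def wait(self):
        now = time.monotonic()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()


# Hypothetical rules for illustration; fetch the site's real robots.txt in practice.
SAMPLE_RULES = "User-agent: *\nDisallow: /private/\n"
allowed = make_robot_checker(SAMPLE_RULES)
```

In a scraping loop, call allowed(url) before each request and skip anything it rejects, then call limiter.wait() on a shared RateLimiter so requests are spaced at least min_interval seconds apart.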

Conclusion

DoorDash data scraping can provide valuable insight for market research, pricing analysis, and customer sentiment tracking. With the right tools, methodologies, and ethical guidelines, an organization can use scraped DoorDash food delivery data to drive data-based decisions.

For automated and efficient extraction of DoorDash food data, one can rely on CrawlXpert, a reliable web scraping solution provider.

Are you ready to extract DoorDash data? Start crawling now with CrawlXpert!

Know More : https://www.crawlxpert.com/blog/scraping-doordash-restaurant-and-menu-data


Career Scope After Completing an Artificial Intelligence Classroom Course in Bengaluru

Artificial Intelligence (AI) has rapidly evolved from a futuristic concept into a critical component of modern technology. As businesses and industries increasingly adopt AI-powered solutions, the demand for skilled professionals in this domain continues to rise. If you're considering a career in AI and are located in India’s tech capital, enrolling in an Artificial Intelligence Classroom Course in Bengaluru could be your best career decision.

This article explores the career opportunities that await you after completing an AI classroom course in Bengaluru, the industries hiring AI talent, and how classroom learning gives you an edge in the job market.

Why Choose an Artificial Intelligence Classroom Course in Bengaluru?

1. Access to India’s AI Innovation Hub

Bengaluru is often called the "Silicon Valley of India" and is home to top tech companies, AI startups, global R&D centers, and prestigious academic institutions. Studying AI in Bengaluru means you’re surrounded by innovation, mentorship, and career opportunities from day one.

2. Industry-Aligned Curriculum

Most reputed institutions offering an Artificial Intelligence Classroom Course in Bengaluru ensure that their curriculum is tailored to industry standards. You gain hands-on experience in tools like Python, TensorFlow, PyTorch, and cloud platforms like AWS or Azure, giving you a competitive edge.

3. In-Person Mentorship & Networking

Unlike online courses, classroom learning offers direct interaction with faculty and peers, live doubt-clearing sessions, group projects, hackathons, and job fairs—all of which significantly boost employability.

What Will You Learn in an AI Classroom Course?

Before we delve into the career scope, let’s understand the core competencies you’ll develop during an Artificial Intelligence Classroom Course in Bengaluru:

Python Programming & Data Structures

Machine Learning & Deep Learning Algorithms

Natural Language Processing (NLP)

Computer Vision

Big Data & Cloud Integration

Model Deployment and MLOps

AI Ethics and Responsible AI Practices

Hands-on experience with real-world projects ensures that you not only understand theoretical concepts but also apply them in practical business scenarios.

Career Scope After Completing an AI Classroom Course

1. Machine Learning Engineer

One of the most in-demand roles today, ML Engineers design and implement algorithms that enable machines to learn from data. With a strong foundation built during your course, you’ll be qualified to work on predictive models, recommendation systems, and autonomous systems.

Salary Range in Bengaluru: ₹8 LPA to ₹22 LPA

Top Hiring Companies: Google, Flipkart, Amazon, Mu Sigma, IBM Research Lab

2. AI Research Scientist

If you have a knack for academic research and innovation, this role allows you to work on cutting-edge AI advancements. Research scientists often work in labs developing new models, improving algorithm efficiency, or working on deep neural networks.

Salary Range: ₹12 LPA to ₹30+ LPA

Top Employers: Microsoft Research, IISc Bengaluru, Bosch, OpenAI India, Samsung R&D

3. Data Scientist

AI and data science go hand in hand. Data scientists use machine learning algorithms to analyze and interpret complex data, build models, and generate actionable insights.

Salary Range: ₹10 LPA to ₹25 LPA

Hiring Sectors: Fintech, eCommerce, Healthcare, EdTech, Logistics

4. Computer Vision Engineer

With industries adopting automation and facial recognition, computer vision engineers are in high demand. From working on surveillance systems to autonomous vehicles and medical imaging, this career path is both versatile and future-proof.

Salary Range: ₹9 LPA to ₹20 LPA

Popular Employers: Nvidia, Tata Elxsi, Qualcomm, Zoho AI

5. Natural Language Processing (NLP) Engineer

NLP is at the core of chatbots, language translators, and sentiment analysis tools. As companies invest in better human-computer interaction, the demand for NLP engineers continues to rise.

Salary Range: ₹8 LPA to ₹18 LPA

Top Recruiters: TCS AI Lab, Adobe India, Razorpay, Haptik

6. AI Product Manager

With your AI knowledge, you can move into managerial roles and lead AI-based product development. These professionals bridge the gap between the technical team and business goals.

Salary Range: ₹18 LPA to ₹35+ LPA

Companies Hiring: Swiggy, Ola Electric, Urban Company, Freshworks

7. AI Consultant

AI consultants work with multiple clients to assess their needs and implement AI solutions for business growth. This career often involves travel, client interaction, and cross-functional knowledge.

Salary Range: ₹12 LPA to ₹28 LPA

Best Suited For: Professionals with prior work experience and communication skills

Certifications and Placements

Many reputed institutions like Boston Institute of Analytics (BIA) offer AI classroom courses in Bengaluru with:

Globally Recognized Certifications

Live Industry Projects

Placement Support with 90%+ Success Rate

Interview Preparation & Resume Building Sessions

Graduates of such courses have gone on to work at top tech firms, startups, and even international research labs.

Final Thoughts

Bengaluru’s tech ecosystem provides an unmatched environment for aspiring AI professionals. Completing an Artificial Intelligence Classroom Course in Bengaluru equips you with the skills, exposure, and confidence to enter high-paying, impactful roles across various industries.

Whether you're a student, IT professional, or career switcher, this classroom course can be your gateway to a future-proof career in one of the world’s most transformative technologies. The real-world projects, in-person mentorship, and direct industry exposure you gain in Bengaluru will set you apart in a competitive job market.

#Best Data Science Courses in Bengaluru #Artificial Intelligence Course in Bengaluru #Data Scientist Course in Bengaluru #Machine Learning Course in Bengaluru


Finding the Right Prompt Engineer: Skills, Traits, and More

As artificial intelligence (AI) continues to revolutionize industries, the demand for specialized roles like prompt engineers has skyrocketed. In particular, businesses working with AI-powered platforms, such as language models, need experts who can effectively design and optimize prompts to achieve accurate and relevant results. So, what should you look for when you hire prompt engineers? Understanding the essential skills, experience, and mindset required for this role is key to ensuring you find the right fit for your project.

Prompt engineering is the art and science of crafting inputs to AI models that guide the model to generate desired outputs. It's a crucial skill when leveraging large language models like GPT-3, GPT-4, or other AI-driven tools for specific tasks, such as content generation, automation, or conversational interfaces. As AI technology becomes more sophisticated, so does the need for skilled professionals who can design these prompts in a way that enhances performance and accuracy.

In this blog post, we will explore the key factors to consider when hiring a prompt engineer, including technical expertise, creativity, and practical experience. Understanding these factors can help you make an informed decision that will contribute to the success of your AI-driven initiatives.

Key Skills and Qualifications for a Prompt Engineer

Strong Understanding of AI and Machine Learning Models

First and foremost, a prompt engineer must have a deep understanding of how AI models, particularly language models, work. They should be well-versed in the theory behind natural language processing (NLP) and how AI systems interpret and respond to different types of input. This knowledge is critical because the effectiveness of the prompts hinges on how well the engineer understands the AI's capabilities and limitations.

A background in AI, machine learning, or a related field is often essential for prompt engineers. Ideally, the candidate should have experience working with models such as OpenAI's GPT, Google's BERT, or other transformer-based models. This understanding helps the engineer tailor prompts to achieve specific outcomes and improve the model's efficiency and relevance in various contexts.

Experience with Data and Text Analysis

Another vital skill is the ability to analyze and interpret large volumes of text data. A prompt engineer should be able to identify patterns, trends, and nuances in text to create prompts that will extract the right kind of response from an AI model. Whether the goal is to generate content, conduct sentiment analysis, or automate a process, understanding how to structure data for the model is key to delivering high-quality outputs.

The ability to process and analyze textual data often goes hand-in-hand with a good command of programming languages such as Python, which is commonly used for text processing and working with machine learning libraries. Familiarity with libraries like TensorFlow or Hugging Face Transformers can be an added advantage.

Creativity and Problem-Solving Abilities

While prompt engineering may seem like a highly technical role, creativity plays a significant part. A good prompt engineer needs to think creatively about how to approach problems and design prompts that generate relevant, meaningful, and often innovative responses. This requires a balance of logic and imagination, as engineers must continually experiment with different prompt variations to find the most effective one.

A strong problem-solving mindset is necessary to optimize prompt performance, especially when working with models that are not perfect and may produce unexpected results. The best prompt engineers know how to tweak inputs, fine-tune instructions, and adjust formatting to guide the AI model toward desired outcomes.
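To make the idea of experimenting with prompt variations concrete, here is a small sketch that generates one prompt per combination of instruction and output format. The function name, the template wording, and the sample inputs are all hypothetical; sending the prompts to a model and scoring the responses is left out.

```python
from itertools import product


def build_prompt_variants(task, instructions, formats):
    """Return one prompt per (instruction, output format) combination."""
    return [
        f"{instruction}\n\nTask: {task}\n\nRespond as {fmt}."
        for instruction, fmt in product(instructions, formats)
    ]


variants = build_prompt_variants(
    task="Summarize the customer review below in one sentence.",
    instructions=["You are a concise analyst.", "You are a friendly support agent."],
    formats=["plain text", "a JSON object with a 'summary' key"],
)

for prompt in variants:
    print(prompt)
    print("-" * 40)
```

Generating variants systematically like this makes it easier to compare outputs side by side and see which change in wording or formatting actually moved the result.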

Communication Skills and Collaboration

Although prompt engineers often work with AI, they must also work closely with other team members, including developers, designers, and project managers. Effective communication is critical when collaborating on a project. A prompt engineer should be able to explain complex technical concepts in an easy-to-understand manner, ensuring that stakeholders are aligned and understand the capabilities and limitations of the AI system.